Real-time, offline, and cross-platform lip sync for MetaHuman and custom characters. Three models to choose from: Standard for universal compatibility, Realistic for premium visual quality, and Mood-Enabled for emotional facial animation. Universal language support: it works with any spoken language.

Choose between our Standard model for broad compatibility and efficient performance, or the Realistic model family for enhanced visual fidelity and emotional expression specifically optimized for MetaHuman characters.

Platforms: Windows, Android, Quest

81 facial controls for natural mouth movements

Adds 12 emotional moods with configurable intensity

Platforms: Windows, Mac, iOS, Linux, Android, Quest

Speech-to-Speech Demo

Full AI workflow: speech recognition + chatbot + TTS + lip sync

Basic Lip Sync Demo

Microphone input, audio files, and TTS workflows

Works with any spoken language, including English, Spanish, French, German, Japanese, Chinese, Korean, Russian, Italian, Portuguese, Arabic, Hindi, and any other language. The plugin analyzes the phonetic content of the audio directly rather than relying on text, which makes it language-independent.

Runtime MetaHuman Lip Sync provides a comprehensive system for dynamic lip sync animation, enabling characters to speak naturally in response to audio input from various sources. Despite its name, the plugin works with a wide range of characters beyond just MetaHumans.

Generate lip sync animations in real-time from microphone input or pre-recorded audio

Works with MetaHuman, Daz Genesis 8/9, Reallusion CC3/CC4, Mixamo, ReadyPlayerMe, and more

Supports FACS-based systems, Apple ARKit, Preston Blair phoneme sets, and 3ds Max phoneme systems

12 mood types with configurable intensity for enhanced character expressiveness

Get up to speed quickly with our comprehensive video tutorials covering Standard, Realistic, and Mood-Enabled models, various audio sources, and integration techniques.

Complete workflow demonstration: speech recognition, AI chatbot integration, text-to-speech, and real-time lip sync animation in one seamless experience.

Learn to use the Realistic model with ElevenLabs and OpenAI for premium-quality lip sync.

Real-time lip sync from microphone input using the enhanced Realistic model.

Efficient real-time lip sync using the Standard model for broad compatibility.

Offline lip sync using the Standard model with local TTS, with no dependence on external services.

Combine Standard model efficiency with high-quality external TTS services.

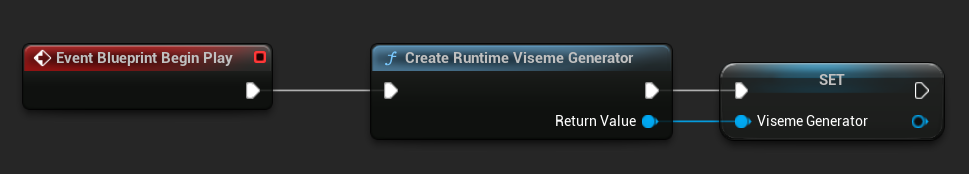

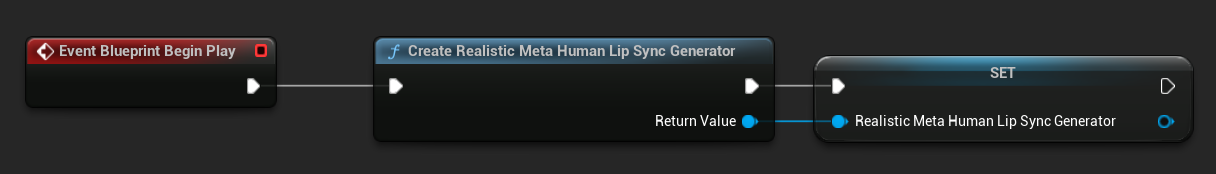

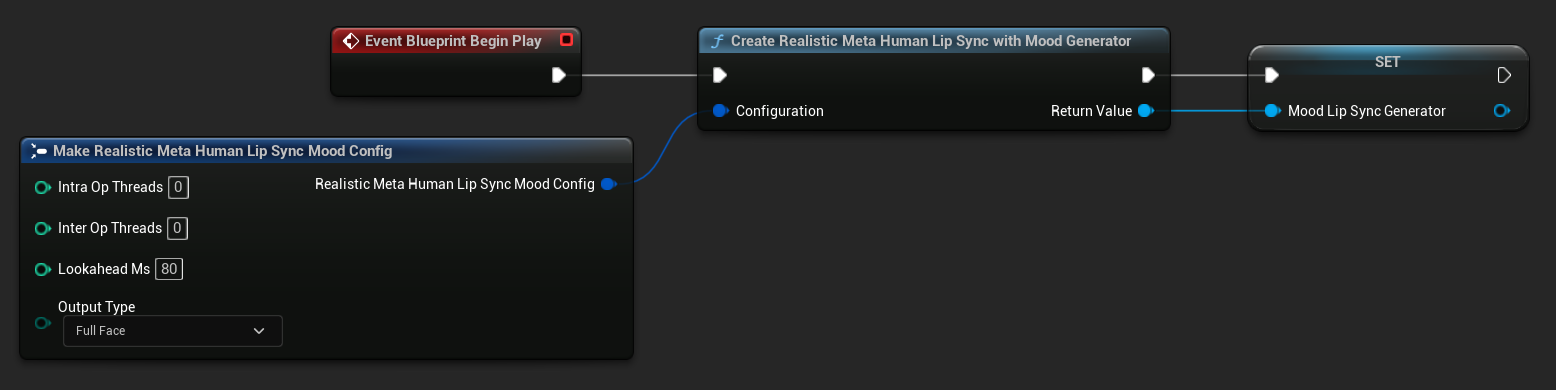

Set up lip sync animation with just a few Blueprint nodes. Choose between Standard, Realistic, and Mood-Enabled generators based on your project needs.

Advanced emotional expression control with 12 different mood types, configurable intensity, and selectable output controls.

Three optimization levels for Realistic models, configurable processing parameters, and platform-specific optimizations.

Best performance for real-time applications

Balanced quality and performance

Highest quality for cinematic use

Maps to character morph targets: Sil, PP, FF, TH, DD, KK, CH, SS, NN, RR, AA, E, IH, OH, OU

Generates MetaHuman facial controls directly, without predefined viseme poses

Full Face or Mouth-Only modes with emotional context
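The Standard model's output can be pictured as one weight per viseme morph target per frame, using the 15 visemes listed above (Sil through OU). The sketch below is a hypothetical data layout, not the plugin's API: `Viseme`, `kVisemeNames`, `VisemeFrame`, `DominantViseme`, and `VisemeName` are illustrative names, and it assumes each weight lies in the 0 to 1 range.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical enum mirroring the Standard model's 15 visemes, in the listed order.
// Count is a sentinel used only to size arrays.
enum class Viseme : std::size_t {
    Sil, PP, FF, TH, DD, KK, CH, SS, NN, RR, AA, E, IH, OH, OU, Count
};

constexpr std::size_t kNumVisemes = static_cast<std::size_t>(Viseme::Count);

// Morph-target names, indexed by Viseme.
constexpr std::array<const char*, kNumVisemes> kVisemeNames = {
    "Sil", "PP", "FF", "TH", "DD", "KK", "CH", "SS",
    "NN", "RR", "AA", "E", "IH", "OH", "OU"};

// Hypothetical per-frame output: one weight per viseme (assumed range 0..1).
using VisemeFrame = std::array<float, kNumVisemes>;

// Returns the viseme with the largest weight; ties resolve to the earliest entry,
// so an all-zero frame yields Sil.
Viseme DominantViseme(const VisemeFrame& frame) {
    std::size_t best = 0;
    for (std::size_t i = 1; i < frame.size(); ++i) {
        if (frame[i] > frame[best]) best = i;
    }
    return static_cast<Viseme>(best);
}

// Maps a viseme to its morph-target name.
std::string VisemeName(Viseme v) {
    assert(v != Viseme::Count);  // Count is a sentinel, not a real viseme
    return kVisemeNames[static_cast<std::size_t>(v)];
}
```

Picking a single dominant viseme is mainly useful for debugging or for 2D characters that swap whole mouth sprites; a 3D character with morph targets would more likely apply every weight at once.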

Float PCM from any source (microphone, TTS, files)

Language-independent audio processing

Generates visemes or facial controls with optional mood context

Applied to character with smooth transitions
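The pipeline above starts from float PCM. Many sources deliver signed 16-bit integer PCM instead, and the usual conversion divides each sample by 32768 so values land in [-1.0, 1.0). The page does not say how the "smooth transitions" are implemented, so the `SmoothWeights` function below uses exponential smoothing as one plausible, hypothetical technique. Both `Int16ToFloatPcm` and `SmoothWeights` are illustrative names, not part of the plugin.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts signed 16-bit PCM samples to float PCM in [-1.0, 1.0).
std::vector<float> Int16ToFloatPcm(const std::vector<std::int16_t>& samples) {
    std::vector<float> out;
    out.reserve(samples.size());
    for (std::int16_t s : samples) {
        out.push_back(static_cast<float>(s) / 32768.0f);
    }
    return out;
}

// Hypothetical smoother (exponential smoothing): moves each current weight toward its
// target by the fraction alpha per frame. alpha = 1 snaps to the target immediately;
// smaller values give softer transitions between visemes or facial controls.
void SmoothWeights(std::vector<float>& current, const std::vector<float>& target, float alpha) {
    assert(current.size() == target.size());
    assert(alpha >= 0.0f && alpha <= 1.0f);
    for (std::size_t i = 0; i < current.size(); ++i) {
        current[i] += alpha * (target[i] - current[i]);
    }
}
```

Exponential smoothing is cheap and frame-local, which suits real-time use; the trade-off is added latency at low alpha values, so the factor would need tuning per project.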

UE 5.0 - 5.7

Extension plugin required (included in documentation)

Runtime Audio Importer plugin

Runtime MetaHuman Lip Sync works seamlessly with other plugins to create complete audio, speech, and animation solutions for your Unreal Engine projects.

Import, stream, and capture audio at runtime to drive lip sync animations. Process audio from files, memory, or microphone input.

Add speech recognition to create interactive characters that respond to voice commands while animating with lip sync.

Generate realistic speech from text offline with 900+ voices and animate character lips in response to the synthesized audio.

Create AI-powered talking characters that respond to user input with natural language and realistic lip sync. Works with all three models.

Combine Runtime MetaHuman Lip Sync with our other plugins to create fully interactive characters that can listen, understand, speak, and animate naturally with emotions. From voice commands to AI-driven conversations with premium visual quality, our plugin ecosystem provides everything you need for next-generation character interaction in any language.

Despite its name, Runtime MetaHuman Lip Sync works with a wide range of characters beyond just MetaHumans. The Standard model provides flexible viseme mapping for various character systems.

The Standard model supports mapping between different viseme standards, allowing you to use characters with various facial animation systems:

Apple ARKit: Map ARKit blendshapes to visemes

FACS-based: Map Action Units to visemes

Preston Blair: Classic animation mouth shapes

3ds Max: Standard 3ds Max phonemes
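Remapping between viseme standards can be modeled as a linear mapping in which each target shape's weight is a weighted sum of source viseme weights. This is an assumption: the page does not document how the plugin performs the mapping. `Contribution`, `VisemeMapping`, and `RemapWeights` are hypothetical names, and any coefficients you plug in (for example, driving the ARKit `jawOpen` blendshape from the `AA` viseme) are placeholders rather than tuned values.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical: one source viseme's contribution to a target shape.
struct Contribution {
    std::string sourceViseme;  // e.g. "AA" from the Standard model's viseme set
    float coefficient;         // placeholder weight, not a tuned value
};

// Hypothetical mapping: target shape name (e.g. an ARKit blendshape) -> contributions.
using VisemeMapping = std::map<std::string, std::vector<Contribution>>;

// Computes each target shape's weight as a weighted sum of source viseme weights,
// clamped to the 0..1 range. Visemes missing from the input contribute nothing.
std::map<std::string, float> RemapWeights(const std::map<std::string, float>& visemeWeights,
                                          const VisemeMapping& mapping) {
    std::map<std::string, float> result;
    for (const auto& entry : mapping) {
        float sum = 0.0f;
        for (const Contribution& c : entry.second) {
            auto it = visemeWeights.find(c.sourceViseme);
            if (it == visemeWeights.end()) continue;
            // Assumes generator weights are already normalized to 0..1.
            assert(it->second >= 0.0f && it->second <= 1.0f);
            sum += c.coefficient * it->second;
        }
        result[entry.first] = std::min(1.0f, std::max(0.0f, sum));
    }
    return result;
}
```

The same structure would apply to FACS, Preston Blair, or 3ds Max targets; only the target names and coefficients change.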

Get started quickly with our detailed documentation covering Standard, Realistic, and Mood-Enabled models. From basic setup to advanced character configuration and emotional animation, we're here to help you succeed.

Step-by-step guides for all three lip sync models, MetaHumans, and custom characters

Latest tutorials covering all models and various integration methods

Get real-time help from developers and users

Contact [email protected] for tailored solutions

Demo Project Walkthrough

Bring your MetaHuman and custom characters to life with real-time lip sync animation and emotional expression. Choose from three powerful models to match your project's needs, with universal language support included.

Universal compatibility with all character types, optimized for mobile and VR platforms

Enhanced visual quality with 81 facial controls specifically for MetaHuman characters

Emotional facial animation with 12 mood types and configurable intensity settings